Uses Docker to run the Qdrant vector database with persistent storage and auto-restart capabilities, enabling vector search for document retrieval.

Supports GitHub Copilot agent mode in VS Code, allowing Copilot to automate Archive Agent operations through MCP tools for searching and retrieving information from indexed documents.

Offers tested support for Linux Mint, with installation scripts designed to work on this Ubuntu-derived distribution.

Allows interacting with a locally running Ollama instance for AI model processing, specifically configured to use models like llama3.1:8b for query processing and llava:7b-v1.6 for vision capabilities.

Provides integration with OpenAI's API for high-performance AI processing, using OpenAI's models for embedding, chunking, and querying operations on indexed documents.

Leverages Python environments for runtime operations, with specific version requirements (>= 3.10) and integration with Python packages for processing documents and images.

Integrates with spaCy's tokenizer model for advanced text processing and semantic chunking with context headers when processing documents.

Provides tested support for Ubuntu 24.04, with installation scripts specifically designed for Ubuntu-derived Linux distributions including Linux Mint.

Archive Agent

An intelligent file indexer with powerful AI search (RAG engine), automatic OCR, and a seamless MCP interface.

Archive Agent brings RAG to your command line and connects to your tools via MCP — it's not a chatbot.

Find what you need with natural language

- Unlock your documents with semantic AI search & query

- Files are split using semantic chunking with context headers and committed to a local database.

- RAG engine¹ uses reranking and expanding of retrieved chunks

¹ Retrieval Augmented Generation is the method of matching pre-made snippets of information to a query.

Natively index your documents on-device

- Includes local AI file system indexer

- Natively ingests PDFs, images, Markdown, plaintext, and more…

- Selects and tracks files using patterns like ~/Documents/*.pdf

- Transcribes images using automatic OCR (experimental) and entity extraction

- Changes are automatically synced to a local Qdrant vector database.

Your AI, Your Choice

- Supports many AI providers and MCP

- OpenAI or compatible API ¹ for best performance

- Ollama and LM Studio for best privacy (local LLM)

- Integrates with your workflow via a built-in MCP server.

¹ Includes the OpenAI-compatible APIs of xAI / Grok and Claude.

Simply adjust the URL settings and overwrite OPENAI_API_KEY.

Scalable Performance

- Fully resumable parallel processing

- Processes multiple files at once using optimized multi-threading.

- Uses AI cache and generous request retry logic for all network requests.

- Leverages AI structured output with high-quality prompts and schemas.

Architecture

(If you can't see the diagram below, view it on Mermaid.live)

Just getting started?

Documentation

- Archive Agent

- Find what you need with natural language

- Natively index your documents on-device

- Your AI, Your Choice

- Scalable Performance

- Architecture

- Just getting started?

- Documentation

- Supported OS

- Install Archive Agent

- AI provider setup

- Which files are processed

- How files are processed

- OCR strategies

- How smart chunking works

- How chunk references work

- How chunks are retrieved

- How chunks are reranked and expanded

- How answers are generated

- How files are selected for tracking

- Run Archive Agent

- Quickstart on the command line (CLI)

- CLI command reference

- See list of commands

- Create or switch profile

- Open current profile config in nano

- Add included patterns

- Add excluded patterns

- Remove included / excluded patterns

- List included / excluded patterns

- Resolve patterns and track files

- List tracked files

- List changed files

- Commit changed files to database

- Combined track and commit

- Search your files

- Query your files

- Launch Archive Agent GUI

- Start MCP Server

- MCP Tools

- Update Archive Agent

- Qdrant database

- Developer's guide

- Tools

- Known issues

- Licensed under GNU GPL v3.0

- Collaborators welcome

- Learn about Archive Agent

Supported OS

Archive Agent has been tested with these configurations:

- Ubuntu 24.04 (PC x64)

- Ubuntu 22.04 (PC x64)

If you've successfully installed and tested Archive Agent with a different setup, please let me know and I'll add it here!

Install Archive Agent

Please install these requirements before proceeding:

Ubuntu / Linux Mint

This installation method should work on any Linux distribution derived from Ubuntu (e.g. Linux Mint).

To install Archive Agent in the current directory of your choice, run this once:

The install.sh script will execute the following steps:

- Download and install uv (used for Python environment management)

- Install the custom Python environment

- Install the spaCy model for natural language processing (pre-chunking)

- Install pandoc (used for document parsing)

- Download and install the Qdrant docker image with persistent storage and auto-restart

- Install a global archive-agent command for the current user

Archive Agent is now installed!

👉 Please complete the AI provider setup next.

(Afterward, you'll be ready to Run Archive Agent!)

AI provider setup

Archive Agent lets you choose between different AI providers:

- Remote APIs (higher performance and cost, less privacy):

- OpenAI: Requires an OpenAI API key.

- Local APIs (lower performance and cost, best privacy):

- Ollama: Requires Ollama running locally.

- LM Studio: Requires LM Studio running locally.

💡 Good to know: You will be prompted to choose an AI provider at startup; see: Run Archive Agent.

📌 Note: You can customize the specific models used by the AI provider in the Archive Agent settings. However, you cannot change the AI provider of an existing profile, as the embeddings will be incompatible; to choose a different AI provider, create a new profile instead.

OpenAI provider setup

If the OpenAI provider is selected, Archive Agent requires the OpenAI API key.

To export your OpenAI API key, replace sk-... with your actual key and run this once:

This will persist the export for the current user.

💡 Good to know: OpenAI won't use your data for training.

Ollama provider setup

If the Ollama provider is selected, Archive Agent requires Ollama running at http://localhost:11434.

With the default Archive Agent Settings, these Ollama models are expected to be installed:

💡 Good to know: Ollama also works without a GPU. At least 32 GiB RAM is recommended for smooth performance.

LM Studio provider setup

If the LM Studio provider is selected, Archive Agent requires LM Studio running at http://localhost:1234.

With the default Archive Agent Settings, these LM Studio models are expected to be installed:

💡 Good to know: LM Studio also works without a GPU. At least 32 GiB RAM is recommended for smooth performance.

Which files are processed

Archive Agent currently supports these file types:

- Text:

  - Plaintext: .txt, .md, .markdown

  - Documents:

    - ASCII documents: .html, .htm (images not supported)

    - Binary documents: .odt, .docx (including images)

  - PDF documents: .pdf (including images; see OCR strategies)

- Images: .jpg, .jpeg, .png, .gif, .webp, .bmp

📌 Note: Images in HTML documents are currently not supported.

📌 Note: Legacy .doc files are currently not supported.

📌 Note: Unsupported files are tracked but not processed.

How files are processed

Ultimately, Archive Agent decodes everything to text like this:

- Plaintext files are decoded to UTF-8.

- Documents are converted to plaintext, images are extracted.

- PDF documents are decoded according to the OCR strategy.

- Images are decoded to text using AI vision.

- Uses OCR, entity extraction, or both combined (default).

- The vision model will reject unintelligible images.

- Entity extraction extracts structured information from images.

- Structured information is formatted as image description.

See Archive Agent settings: image_ocr, image_entity_extract

Archive Agent processes files with optimized performance:

- Surgical Synchronization:

- PDF analyzing phase is serialized (due to PyMuPDF threading limitations).

- All other phases (vision, chunking, embedding) run in parallel for maximum performance.

- Vision operations are parallelized across images and pages within and across files.

- Embedding operations are parallelized across text chunks and files.

- Smart chunking uses sequential processing due to carry mechanism dependencies.

See Archive Agent settings: max_workers_ingest, max_workers_vision, max_workers_embed
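The parallel phases described above can be pictured with a small thread-pool sketch. This is an illustration only, not Archive Agent's actual code: fake_embed and embed_chunks_parallel are hypothetical names, and the max_workers_embed parameter merely borrows the name of the setting.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fake_embed(chunk: str) -> List[float]:
    """Hypothetical stand-in for a network embedding request."""
    return [float(len(chunk))]


def embed_chunks_parallel(
    chunks: List[str],
    embed: Callable[[str], List[float]],
    max_workers_embed: int = 4,
) -> List[List[float]]:
    """Embed chunks concurrently, one thread per in-flight request."""
    with ThreadPoolExecutor(max_workers=max_workers_embed) as pool:
        # Executor.map returns results in the order the chunks were given.
        return list(pool.map(embed, chunks))
```

Threads suit this kind of work because each request spends most of its time waiting on the network.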

OCR strategies

For PDF documents, there are different OCR strategies supported by Archive Agent:

- strict OCR strategy (recommended):

  - PDF OCR text layer is ignored.

  - PDF pages are treated as images and processed with OCR only.

  - Expensive and slow, but more accurate.

- relaxed OCR strategy:

  - PDF OCR text layer is extracted.

  - PDF foreground images are decoded with OCR, but background images are ignored.

  - Cheap and fast, but less accurate.

- auto OCR strategy:

  - Attempts to select the best OCR strategy for each page, based on the number of characters extracted from the PDF OCR text layer, if any.

  - Decides based on ocr_auto_threshold, the minimum number of characters for auto OCR strategy to resolve to relaxed instead of strict.

  - Trade-off between cost, speed, and accuracy.

⚠️ Warning: The auto OCR strategy is still experimental.
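The per-page decision of the auto OCR strategy can be sketched as a single comparison. The function name resolve_auto_ocr_strategy is hypothetical, and treating the threshold as inclusive is an assumption based on the word "minimum" in the description of ocr_auto_threshold.

```python
def resolve_auto_ocr_strategy(text_layer_chars: int, ocr_auto_threshold: int) -> str:
    """Resolve the auto strategy for one page to 'relaxed' or 'strict'."""
    # Enough characters in the PDF text layer: use the cheaper strategy.
    if text_layer_chars >= ocr_auto_threshold:
        return "relaxed"
    # Too little text (for example, a scanned page): OCR the whole page.
    return "strict"
```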

PDF documents often contain small/scattered images related to page style/layout which cause overhead while contributing little information or even cluttering the result.

💡 Good to know: You will be prompted to choose an OCR strategy at startup (see Run Archive Agent).

How smart chunking works

Archive Agent processes decoded text like this:

- Decoded text is sanitized and split into sentences.

- Sentences are grouped into reasonably-sized blocks.

- Each block is split into smaller chunks using an AI model.

- Block boundaries are handled gracefully (last chunk carries over).

- Each chunk is prefixed with a context header (improves search).

- Each chunk is turned into a vector using AI embeddings.

- Each vector is turned into a point with file metadata.

- Each point is stored in the Qdrant database.

See Archive Agent settings: chunk_lines_block, chunk_words_target

💡 Good to know: This smart chunking improves the accuracy and effectiveness of the retrieval.

📌 Note: Splitting into sentences may take some time for huge documents. There is currently no possibility to show the progress of this step.
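The block and carry-over steps above can be sketched as follows. This is a simplified illustration under stated assumptions: split_block_naive is a hypothetical stand-in for the AI chunker (it just groups a fixed number of sentences), and chunk_with_carry is not the project's actual function.

```python
from typing import Callable, List


def split_block_naive(sentences: List[str], per_chunk: int = 2) -> List[List[str]]:
    """Hypothetical stand-in for the AI chunker: fixed-size groups of sentences."""
    return [sentences[i:i + per_chunk] for i in range(0, len(sentences), per_chunk)]


def chunk_with_carry(
    sentences: List[str],
    chunk_lines_block: int,
    split_block: Callable[[List[str]], List[List[str]]] = split_block_naive,
) -> List[List[str]]:
    """Chunk block by block, carrying the last chunk of a block into the next one."""
    chunks: List[List[str]] = []
    carry: List[str] = []
    for start in range(0, len(sentences), chunk_lines_block):
        block = carry + sentences[start:start + chunk_lines_block]
        block_chunks = split_block(block)
        if start + chunk_lines_block >= len(sentences):
            # Last block: nothing follows, so every chunk is final.
            chunks.extend(block_chunks)
            carry = []
        else:
            # The final chunk may be cut off by the block boundary, so it is
            # chunked again together with the next block.
            chunks.extend(block_chunks[:-1])
            carry = block_chunks[-1]
    return chunks
```

Because each block depends on the carry from the previous one, this step cannot be parallelized within a file.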

How chunk references work

To ensure that every chunk can be traced back to its origin, Archive Agent maps the text contents of each chunk to the corresponding line numbers or page numbers of the source file.

- Line-based files (e.g., .txt) use the range of line numbers as reference.

- Page-based files (e.g., .pdf) use the range of page numbers as reference.

📌 Note: References are only approximate due to paragraph/sentence splitting/joining in the chunking process.

How chunks are retrieved

Archive Agent retrieves chunks related to your question like this:

- The question is turned into a vector using AI embeddings.

- Points with similar vectors are retrieved from the Qdrant database.

- Only chunks of points with sufficient score are kept.

See Archive Agent settings: retrieve_score_min, retrieve_chunks_max
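The retrieval steps above can be sketched in plain Python. In Archive Agent the similarity search is performed by Qdrant; the version below only illustrates the idea. Cosine similarity is an assumption (Qdrant supports several distance metrics), and the function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(
    question_vector: List[float],
    points: Dict[str, List[float]],
    retrieve_score_min: float,
    retrieve_chunks_max: int,
) -> List[Tuple[str, float]]:
    """Return up to retrieve_chunks_max chunks scoring at least retrieve_score_min."""
    scored = [(chunk, cosine_similarity(question_vector, vec)) for chunk, vec in points.items()]
    kept = [(chunk, score) for chunk, score in scored if score >= retrieve_score_min]
    kept.sort(key=lambda item: item[1], reverse=True)  # best match first
    return kept[:retrieve_chunks_max]
```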

How chunks are reranked and expanded

Archive Agent filters the retrieved chunks like this:

- Retrieved chunks are reranked by relevance to your question.

- Only the top relevant chunks are kept (the other chunks are discarded).

- Each selected chunk is expanded to get a larger context from the relevant documents.

See Archive Agent settings: rerank_chunks_max, expand_chunks_radius
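The reranking and expansion steps can be sketched with chunk indices inside a single file. This is a simplified illustration: in Archive Agent the relevance comes from an AI reranking model, whereas here the scores are simply passed in, and both function names are hypothetical.

```python
from typing import Dict, List


def expand_chunk_indices(index: int, radius: int, total_chunks: int) -> List[int]:
    """A chunk plus up to radius neighbours on each side, clamped to the file."""
    first = max(0, index - radius)
    last = min(total_chunks - 1, index + radius)
    return list(range(first, last + 1))


def rerank_and_expand(
    relevance: Dict[int, float],
    rerank_chunks_max: int,
    expand_chunks_radius: int,
    total_chunks: int,
) -> List[List[int]]:
    """Keep the most relevant chunks, then widen each one with its neighbours."""
    top = sorted(relevance, key=lambda i: relevance[i], reverse=True)[:rerank_chunks_max]
    return [expand_chunk_indices(i, expand_chunks_radius, total_chunks) for i in top]
```

For example, with a radius of 1 in a file of 10 chunks, chunk 4 expands to chunks 3 to 5, while chunk 9 (the last one) expands to chunks 8 and 9 only.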

How answers are generated

Archive Agent answers your question using the reranked and expanded chunks like this:

- The LLM receives the chunks as context to the question.

- LLM's answer is returned as structured output and formatted.

💡 Good to know: Archive Agent uses an answer template that aims to be universally helpful.

How files are selected for tracking

Archive Agent uses patterns to select your files:

- Patterns can be actual file paths.

- Patterns can be paths containing wildcards that resolve to actual file paths.

- 💡 Patterns must be specified as (or resolve to) absolute paths, e.g. /home/user/Documents/*.txt (or ~/Documents/*.txt).

- 💡 Use the wildcard * to match any file in the given directory.

- 💡 Use the wildcard ** to match any files and zero or more directories, subdirectories, and symbolic links to directories.

There are included patterns and excluded patterns:

- The set of resolved excluded files is removed from the set of resolved included files.

- Only the remaining set of files (included but not excluded) is tracked by Archive Agent.

- Hidden files are always ignored!

This approach gives you the best control over the specific files or file types to track.
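The include/exclude logic amounts to a set difference over resolved paths. The sketch below uses Python's standard glob module and is an approximation, not Archive Agent's implementation: resolve_patterns and tracked_files are hypothetical names, and only hidden file names (not hidden parent directories) are filtered here.

```python
import glob
import os
from typing import Iterable, Set


def resolve_patterns(patterns: Iterable[str]) -> Set[str]:
    """Resolve wildcard patterns to a set of existing, non-hidden files."""
    files: Set[str] = set()
    for pattern in patterns:
        expanded = os.path.expanduser(pattern)  # supports ~/Documents/*.txt
        # recursive=True lets ** match zero or more directories.
        for path in glob.glob(expanded, recursive=True):
            if os.path.isfile(path) and not os.path.basename(path).startswith("."):
                files.add(os.path.abspath(path))
    return files


def tracked_files(included: Iterable[str], excluded: Iterable[str]) -> Set[str]:
    """Files matched by included patterns minus files matched by excluded patterns."""
    return resolve_patterns(included) - resolve_patterns(excluded)
```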

Run Archive Agent

💡 Good to know: At startup, you will be prompted to choose the following:

- Profile name

- AI provider (see AI Provider Setup)

- OCR strategy (see OCR strategies)

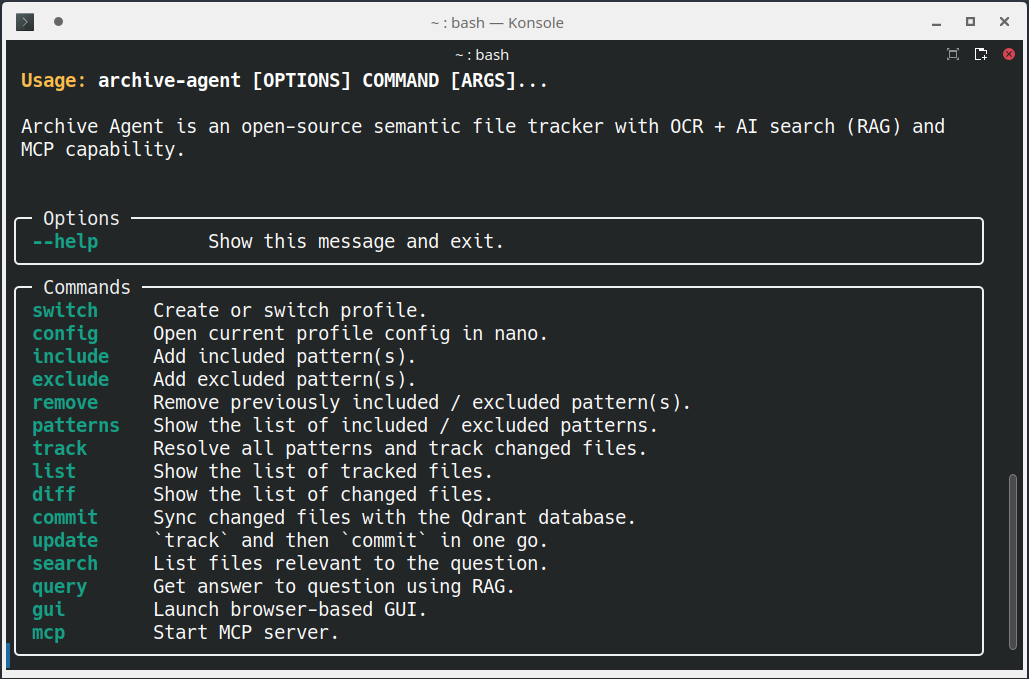

Screenshot of command-line interface (CLI):

Quickstart on the command line (CLI)

For example, to track your documents and images, run this:

To start the GUI, run this:

Or, to ask questions from the command line:

CLI command reference

See list of commands

To see the list of supported commands, run this:

Create or switch profile

To switch to a new or existing profile, run this:

📌 Note: Always use quotes for the profile name argument, or skip it to get an interactive prompt.

💡 Good to know: Profiles are useful to manage independent Qdrant collections (see Qdrant database) and Archive Agent settings.

Open current profile config in nano

To open the current profile's config (JSON) in the nano editor, run this:

See Archive Agent settings for details.

Add included patterns

To add one or more included patterns, run this:

📌 Note: Always use quotes for the pattern argument (to prevent your shell's wildcard expansion), or skip it to get an interactive prompt.

Add excluded patterns

To add one or more excluded patterns, run this:

📌 Note: Always use quotes for the pattern argument (to prevent your shell's wildcard expansion), or skip it to get an interactive prompt.

Remove included / excluded patterns

To remove one or more previously included / excluded patterns, run this:

📌 Note: Always use quotes for the pattern argument (to prevent your shell's wildcard expansion), or skip it to get an interactive prompt.

List included / excluded patterns

To see the list of included / excluded patterns, run this:

Resolve patterns and track files

To resolve all patterns and track changes to your files, run this:

List tracked files

To see the list of tracked files, run this:

📌 Note: Don't forget to track your files first.

List changed files

To see the list of changed files, run this:

📌 Note: Don't forget to track your files first.

Commit changed files to database

To sync changes to your files with the Qdrant database, run this:

To see additional information (vision, chunking, embedding), pass the --verbose option.

To bypass the AI cache (vision, chunking, embedding) for this commit, pass the --nocache option.

💡 Good to know: Changes are triggered by:

- File added

- File removed

- File changed:

- Different file size

- Different modification date
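The change triggers listed above can be sketched as a comparison of two snapshots. This is a minimal illustration, assuming each snapshot maps a file path to its size and modification time; diff_files and FileMeta are hypothetical names, not the project's actual data structures.

```python
from typing import Dict, Tuple

FileMeta = Tuple[int, float]  # (size in bytes, modification time)


def diff_files(previous: Dict[str, FileMeta], current: Dict[str, FileMeta]) -> Dict[str, str]:
    """Classify each path as added, changed, or removed; unchanged paths are omitted."""
    changes: Dict[str, str] = {}
    for path, meta in current.items():
        if path not in previous:
            changes[path] = "added"
        elif previous[path] != meta:
            # Different size or different modification time.
            changes[path] = "changed"
    for path in previous:
        if path not in current:
            changes[path] = "removed"
    return changes
```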

The Qdrant database is updated after all files have been ingested.

📌 Note: Don't forget to track your files first.

Combined track and commit

To track and then commit in one go, run this:

To see additional information (vision, chunking, embedding), pass the --verbose option.

To bypass the AI cache (vision, chunking, embedding) for this commit, pass the --nocache option.

Search your files

Lists files relevant to the question.

📌 Note: Always use quotes for the question argument, or skip it to get an interactive prompt.

To see additional information (embedding, retrieval, reranking), pass the --verbose option.

To bypass the AI cache (embedding, reranking) for this search, pass the --nocache option.

Query your files

Answers your question using RAG.

📌 Note: Always use quotes for the question argument, or skip it to get an interactive prompt.

To see additional information (embedding, retrieval, reranking, querying), pass the --verbose option.

To bypass the AI cache (embedding, reranking) for this query, pass the --nocache option.

To save the query results to a JSON file, use either:

- --to-json with a specific filename:

- --to-json-auto to auto-generate a clean filename from the question (max 160 chars, truncated with [...] if needed):

💡 Good to know: The JSON output follows the QuerySchema format defined in AiQuery.py.
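How an auto-generated filename might be derived from a question can be sketched as follows. Only the 160-character limit and the [...] marker come from the description above; the cleaning rule (replace anything other than letters, digits, "-" and "_" with "_"), applying the limit to the name without the .json extension, and the function name auto_json_filename are all assumptions.

```python
import re

MAX_STEM_CHARS = 160  # stated limit; applying it to the stem only is an assumption
ELLIPSIS = "[...]"


def auto_json_filename(question: str) -> str:
    """Build a filesystem-friendly JSON filename from a question (assumed rules)."""
    # Assumed cleaning rule: collapse every run of other characters into "_".
    stem = re.sub(r"[^A-Za-z0-9_-]+", "_", question).strip("_")
    if len(stem) > MAX_STEM_CHARS:
        # Reserve room for the truncation marker.
        stem = stem[: MAX_STEM_CHARS - len(ELLIPSIS)] + ELLIPSIS
    return stem + ".json"
```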

Launch Archive Agent GUI

To launch the Archive Agent GUI in your browser, run this:

To see additional information (embedding, retrieval, reranking, querying), pass the --verbose option.

To bypass the AI cache (embedding, reranking) for this query, pass the --nocache option.

📌 Note: Press CTRL+C in the console to close the GUI server.

Start MCP Server

To start the Archive Agent MCP server, run this:

To see additional information (embedding, retrieval, reranking, querying), pass the --verbose option.

To bypass the AI cache (embedding, reranking) for this query, pass the --nocache option.

📌 Note: Press CTRL+C in the console to close the MCP server.

💡 Good to know: Use these MCP configurations to let your IDE or AI extension automate Archive Agent:

- .vscode/mcp.json for GitHub Copilot agent mode (VS Code):

- .roo/mcp.json for Roo Code (VS Code extension)

MCP Tools

Archive Agent exposes these tools via MCP:

| MCP tool | Equivalent CLI command(s) | Argument(s) | Implementation | Description |
|---|---|---|---|---|
| get_patterns | patterns | None | Synchronous | Get the list of included / excluded patterns. |
| get_files_tracked | track and then list | None | Synchronous | Get the list of tracked files. |
| get_files_changed | track and then diff | None | Synchronous | Get the list of changed files. |
| get_search_result | search | question | Asynchronous | Get the list of files relevant to the question. |
| get_answer_rag | query | question | Asynchronous | Get answer to question using RAG. |

📌 Note: These commands are read-only, preventing the AI from changing your Qdrant database.

💡 Good to know: Just type #get_answer_rag (e.g.) in your IDE or AI extension to call the tool directly.

💡 Good to know: The #get_answer_rag output follows the QuerySchema format defined in AiQuery.py.

Update Archive Agent

This step is not immediately needed if you just installed Archive Agent. However, to get the latest features, you should update your installation regularly.

To update your Archive Agent installation, run this in the installation directory:

📌 Note: If updating doesn't work, try removing the installation directory and then Install Archive Agent again. Your config and data are safely stored in another place; see Archive Agent settings and Qdrant database for details.

💡 Good to know: To also update the Qdrant docker image, run this:

Archive Agent settings

Archive Agent settings are organized as profile folders in ~/.archive-agent-settings/.

E.g., the default profile is located in ~/.archive-agent-settings/default/.

The currently used profile is stored in ~/.archive-agent-settings/profile.json.

📌 Note: To delete a profile, simply delete the profile folder. This will not delete the Qdrant collection (see Qdrant database).

Profile configuration

The profile configuration is contained in the profile folder as config.json.

💡 Good to know: Use the config CLI command to open the current profile's config (JSON) in the nano editor (see Open current profile config in nano).

💡 Good to know: Use the switch CLI command to switch to a new or existing profile (see Create or switch profile).

| Key | Description |
|---|---|
| config_version | Config version |
| mcp_server_host | MCP server host (default http://127.0.0.1; set to http://0.0.0.0 to expose in LAN) |
| mcp_server_port | MCP server port (default 8008) |
| ocr_strategy | OCR strategy in DecoderSettings.py |
| ocr_auto_threshold | Minimum number of characters for auto OCR strategy to resolve to relaxed instead of strict |
| image_ocr | Image handling: true enables OCR, false disables it. |
| image_entity_extract | Image handling: true enables entity extraction, false disables it. |
| chunk_lines_block | Number of lines per block for chunking |
| chunk_words_target | Target number of words per chunk |
| qdrant_server_url | URL of the Qdrant server |
| qdrant_collection | Name of the Qdrant collection |
| retrieve_score_min | Minimum similarity score of retrieved chunks (0...1) |
| retrieve_chunks_max | Maximum number of retrieved chunks |
| rerank_chunks_max | Number of top chunks to keep after reranking |
| expand_chunks_radius | Number of preceding and following chunks to prepend and append to each reranked chunk |
| max_workers_ingest | Maximum number of files to process in parallel, creating one thread for each file |
| max_workers_vision | Maximum number of parallel vision requests per file, creating one thread per request |
| max_workers_embed | Maximum number of parallel embedding requests per file, creating one thread per request |
| ai_provider | AI provider in ai_provider_registry.py |
| ai_server_url | AI server URL |
| ai_model_chunk | AI model used for chunking |
| ai_model_embed | AI model used for embedding |
| ai_model_rerank | AI model used for reranking |
| ai_model_query | AI model used for queries |
| ai_model_vision | AI model used for vision ("" disables vision) |
| ai_vector_size | Vector size of embeddings (used for Qdrant collection) |
| ai_temperature_query | Temperature of the query model |

📌 Note: Since max_workers_vision and max_workers_embed requests are processed in parallel per file, and max_workers_ingest files are processed in parallel, the total number of requests multiplies quickly. Adjust according to your system resources and in alignment with your AI provider's rate limits.
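As a rough worked example of how the worker settings multiply, the upper bound on simultaneous requests in one phase is the number of files in flight times the per-file limit. The values below are made up for illustration and are not the defaults; max_parallel_requests is a hypothetical helper.

```python
def max_parallel_requests(max_workers_ingest: int, max_workers_per_file: int) -> int:
    """Upper bound on simultaneous requests in one phase (vision or embedding)."""
    return max_workers_ingest * max_workers_per_file


# Illustrative values only: 4 files in parallel, 8 vision requests per file.
print(max_parallel_requests(4, 8))
```

Compare the result with the rate limits of your AI provider before raising either setting.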

Watchlist

The profile watchlist is contained in the profile folder as watchlist.json.

The watchlist is managed by these commands only:

- include / exclude / remove

- track / commit / update

AI cache

Each profile folder also contains an ai_cache folder.

The AI cache ensures that, in a given profile:

- The same image is only OCR-ed once.

- The same text is only chunked once.

- The same text is only embedded once.

- The same combination of chunks is only reranked once.

This way, Archive Agent can quickly resume where it left off if a commit was interrupted.

To bypass the AI cache for a single commit, pass the --nocache option to the commit or update command (see Commit changed files to database and Combined track and commit).

💡 Good to know: Queries are never cached, so you always get a fresh answer.

📌 Note: To clear the entire AI cache, simply delete the profile's ai_cache folder.

📌 Technical Note: Archive Agent keys the cache using a composite hash made from the text/image bytes and the AI model names for chunking, embedding, reranking, and vision. Cache keys are deterministic and change whenever you change the chunking, embedding, or vision AI model names. Since cache entries are retained forever, switching back to a prior combination of AI model names will again access the "old" keys.
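A composite cache key of this kind can be sketched with Python's hashlib. This is an illustration under assumptions, not Archive Agent's actual scheme: the choice of SHA-256, the separator byte, and the name cache_key are all hypothetical.

```python
import hashlib
from typing import Iterable


def cache_key(payload: bytes, model_names: Iterable[str]) -> str:
    """Deterministic key derived from the content bytes and the AI model names."""
    digest = hashlib.sha256()
    digest.update(payload)
    for name in model_names:
        # Separator byte so that ("ab", "c") and ("a", "bc") produce different keys.
        digest.update(b"\x00")
        digest.update(name.encode("utf-8"))
    return digest.hexdigest()
```

The same content with the same model names always yields the same key, while changing any model name yields a different one, which is why switching back to an earlier model combination finds the earlier entries again.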

Qdrant database

The Qdrant database is stored in ~/.archive-agent-qdrant-storage/.

📌 Note: This folder is created by the Qdrant Docker image running as root.

💡 Good to know: Visit your Qdrant dashboard to manage collections and snapshots.

Developer's guide

Archive Agent was written from scratch for educational purposes (on either end of the software).

💡 Good to know: Tracking the test_data/ folder gives you some test data to get started with.

Important modules

To get started, check out these epic modules:

- Files are processed in archive_agent/data/FileData.py

- The app context is initialized in archive_agent/core/ContextManager.py

- The default config is defined in archive_agent/config/ConfigManager.py

- The CLI commands are defined in archive_agent/__main__.py

- The commit logic is implemented in archive_agent/core/CommitManager.py

- The CLI verbosity is handled in archive_agent/util/CliManager.py

- The GUI is implemented in archive_agent/core/GuiManager.py

- The AI API prompts for chunking, embedding, vision, and querying are defined in archive_agent/ai/AiManager.py

- The AI provider registry is located in archive_agent/ai_provider/ai_provider_registry.py

If you miss something or spot bad patterns, feel free to contribute and refactor!

Network and Retry Handling

Archive Agent implements comprehensive retry logic with exponential backoff to handle transient failures:

- AI Provider Operations: 10 retries with exponential backoff (max 60s delay) for network timeouts and API errors.

- Database Operations: 10 retries with exponential backoff (max 10s delay) for Qdrant connection issues.

- Schema Validation: Additional 10 linear retry attempts for AI response parsing failures with cache invalidation.

- Dual-Layer Strategy: Network-level retries handle infrastructure failures, while schema-level retries handle AI response quality issues.

This robust retry system ensures reliable operation even with unstable network conditions or intermittent service issues.
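The network-level retry behaviour can be sketched as follows. Only the retry count and the delay caps come from the list above; the 1-second base delay, the doubling factor, catching every Exception, and the names backoff_delays and call_with_retry are assumptions made for this illustration.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def backoff_delays(retries: int = 10, base: float = 1.0, max_delay: float = 60.0) -> List[float]:
    """Delays before each retry: base, 2*base, 4*base, ... capped at max_delay."""
    return [min(base * 2 ** attempt, max_delay) for attempt in range(retries)]


def call_with_retry(
    operation: Callable[[], T],
    retries: int = 10,
    base: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation, retrying up to retries times with exponential backoff."""
    for delay in backoff_delays(retries, base, max_delay):
        try:
            return operation()
        except Exception:
            # Assumption: every error is treated as transient in this sketch;
            # real code would typically catch only timeouts and API errors.
            sleep(delay)
    # Final attempt after the last delay; any exception propagates to the caller.
    return operation()
```

Passing max_delay=10.0 would model the shorter cap described for database operations.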

Code testing and analysis

To run unit tests, check types, and check style, run this:

Run Qdrant with in-memory storage

To run Qdrant with in-memory storage (e.g., in OpenAI Codex environment where Docker is not available), export this environment variable before running install.sh and archive-agent:

- The environment variable is checked by install.sh to skip manage-qdrant.sh.

- The environment variable is checked by QdrantManager.py to ignore server URL and use in-memory storage instead.

📌 Note: Qdrant in-memory storage is volatile (not persisted to disk).

Tools

Rename file paths in chunk metadata

To bulk-rename file paths in chunk metadata in the currently active Qdrant collection, run this:

Useful after moving files or renaming folders when you don't want to run the update command again.

📌 Note:

- This tool modifies the Qdrant database directly — ensure you have backups if working with critical data.

- This tool will not update the tracked files. You need to update your watchlist (see Archive Agent settings) using manual search and replace.

Remove file paths from context headers

Archive Agent < v11.0.0 included file paths in the chunk context headers; this was a bad design decision that led to skewed retrieval.

To bulk-remove all file paths in context headers in the currently active Qdrant collection, run this:

📌 Note:

- This tool modifies the Qdrant database directly — ensure you have backups if working with critical data.

Known issues

- While track initially reports a file as added, subsequent track calls report it as changed.

- Removing and restoring a tracked file in the tracking phase is currently not handled properly:

  - Removing a tracked file sets {size=0, mtime=0, diff=removed}.

  - Restoring a tracked file sets {size=X, mtime=Y, diff=added}.

  - Because size and mtime were cleared, we lost the information to detect a restored file.

- AI vision is employed on empty images as well, even though they could be easily detected locally and skipped.

- PDF vector images may not convert as expected, due to missing tests. (Using strict OCR strategy would certainly help in the meantime.)

- Binary document page numbers (e.g., .docx) are not supported yet; Microsoft Word document support is experimental.

- References are only approximate due to paragraph/sentence splitting/joining in the chunking process.

- AI cache does not handle AiResult schema migration yet. (If you encounter errors, passing the --nocache flag or deleting all AI cache folders would certainly help in the meantime.)

- Rejected images (e.g., due to OpenAI content filter policy violation) from PDF pages in strict OCR mode are currently left empty instead of resorting to text extracted from PDF OCR layer (if any).

- The spaCy model en_core_web_md used for sentence splitting is only suitable for English source text. Multilingual support is missing at the moment.

- HTML document images are not supported.

- The behaviour of handling unprocessable files is not customizable yet. Should the user be prompted? Should the entire file be rejected? Unprocessable images are currently tolerated and replaced by [Unprocessable image].

Licensed under GNU GPL v3.0

Copyright © 2025 Dr.-Ing. Paul Wilhelm <paul@wilhelm.dev>

See LICENSE for details.

Collaborators welcome

You are invited to contribute to this open source project! Feel free to file issues and submit pull requests anytime.

Learn about Archive Agent
